Litepaper

What is Decision AI, and why Decision AI?

Decision AI is a decentralized GPU cloud dedicated to AI compute. Its foundation is a DePIN protocol in which GPU owners can supply compute resources permissionlessly, and users can run AI and other GPU-heavy workloads through simple API/SDK access. On top of this cost-efficient, scalable infrastructure, we are building an open ecosystem that empowers developers to create, deploy, and monetize innovative AI solutions.

Existing GPU clouds have made strides toward an “Airbnb of GPUs”, where users rent GPUs directly. However, this model requires technical expertise and extra labor to manage the rented machines, and it makes scaling compute up or down on demand difficult. These limitations have held back the adoption of GPU DePINs.

Decision AI builds serverless compute into its core protocol design. What is serverless? It is a model that abstracts infrastructure away from applications: there are no virtual machines to manage and no software framework dependencies to set up. Decision AI adopts a Platform-as-a-Service (PaaS) model, similar to AWS SageMaker and Hugging Face, and scales resources dynamically as demand changes in real time. The heavy lifting of infrastructure management is hidden behind intuitive APIs and frontends.
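To make “scales resources dynamically” concrete, here is a minimal sketch of a queue-depth autoscaling rule of the kind a serverless platform might apply to each endpoint. It is illustrative only. The names `ScalingPolicy` and `desired_workers` and the default thresholds are hypothetical. They are not Decision AI’s API or configuration.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    # Hypothetical parameters for illustration; not Decision AI's actual configuration.
    target_requests_per_worker: int = 8  # queued requests one GPU worker should absorb
    min_workers: int = 0                 # 0 allows scale-to-zero when the endpoint is idle
    max_workers: int = 16                # cap on GPU workers for a single endpoint


def desired_workers(queued_requests: int, policy: ScalingPolicy) -> int:
    """Return how many GPU workers an endpoint should run for the current queue depth."""
    if queued_requests <= 0:
        return policy.min_workers
    # One worker per `target_requests_per_worker` queued requests, rounded up.
    needed = math.ceil(queued_requests / policy.target_requests_per_worker)
    # Clamp to the configured bounds.
    return max(policy.min_workers, min(policy.max_workers, needed))


if __name__ == "__main__":
    policy = ScalingPolicy()
    for queue in (0, 5, 40, 500):
        print(queue, "->", desired_workers(queue, policy))  # 0, 1, 5, 16 workers
```

Setting `min_workers` to zero lets an idle endpoint release all of its GPUs. This is the scale-to-zero behaviour usually associated with serverless platforms. A production scaler would also smooth the demand signal over time so that it does not add and remove workers on every fluctuation.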

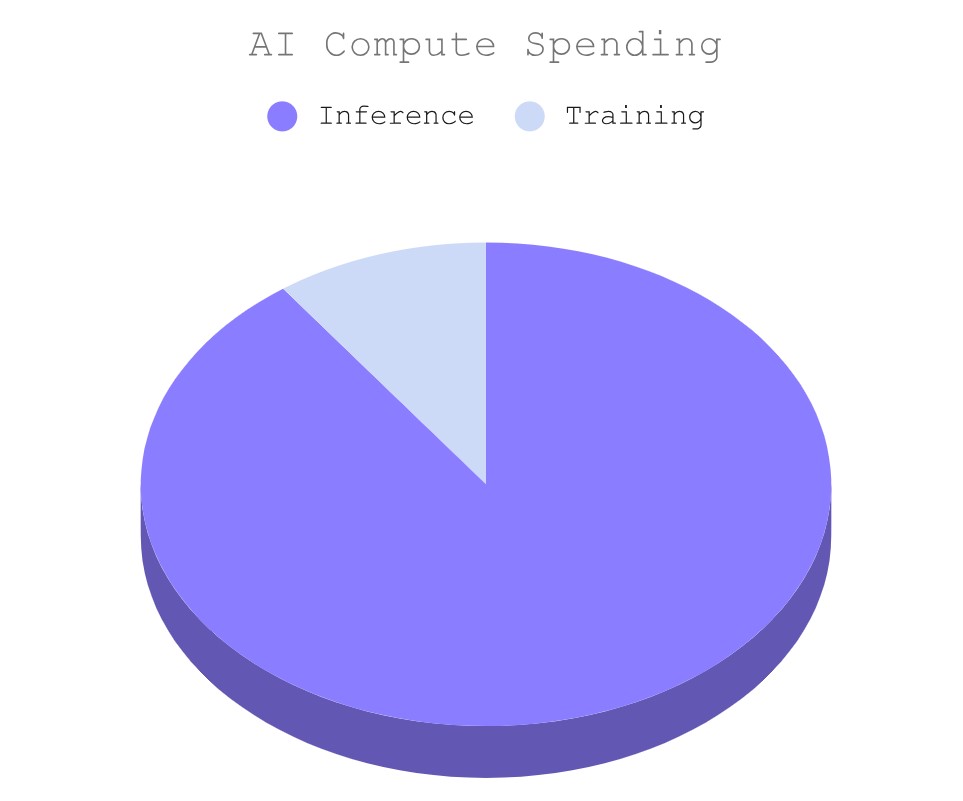

The primary focus of our service is AI inference. By some estimates, 90% of the compute consumed over an AI model’s lifecycle goes to inference. AI training requires large numbers of interconnected GPUs clustered in large-scale datacenters optimized for network bandwidth and energy. AI inference, by contrast, can run efficiently on a single GPU or on one machine with multiple GPUs. This makes inference an ideal workload for a globally distributed GPU network.
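A back-of-the-envelope check shows why many inference workloads fit on one GPU. The memory needed for a model’s weights is roughly the parameter count multiplied by the bytes stored per parameter. The sketch below simplifies by reserving an assumed fixed 20% of VRAM for activations, KV cache, and runtime overhead. The helpers `weight_memory_gb` and `fits_on_gpu` are hypothetical. Real requirements vary with the model, batch size, and context length.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_on_gpu(num_params: float, bytes_per_param: float,
                gpu_vram_gb: float, headroom: float = 0.8) -> bool:
    """Check whether the weights fit in the usable share of one GPU's VRAM.

    `headroom` is an assumed fraction of VRAM available for weights; the rest
    is reserved for activations, KV cache and runtime overhead.
    """
    return weight_memory_gb(num_params, bytes_per_param) <= gpu_vram_gb * headroom


if __name__ == "__main__":
    # 7B parameters in 16-bit precision (2 bytes each) on a 24 GB card.
    print(weight_memory_gb(7e9, 2), fits_on_gpu(7e9, 2, 24))    # 14.0 True
    # 70B parameters in 16-bit precision on an 80 GB card.
    print(weight_memory_gb(70e9, 2), fits_on_gpu(70e9, 2, 80))  # 140.0 False
```

The second case shows where “one machine with multiple GPUs” comes in. Such a model can be split across several GPUs in the same machine. Alternatively, it can be quantized to fewer bytes per parameter. For example, 4-bit weights (0.5 bytes each) bring it down to 35 GB.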

Decision AI can support a wide range of GPU compute tasks beyond AI inference, including training of small language models, model fine-tuning, and ZK proof generation. What these tasks have in common is that they do not require intensive communication between network nodes, which lets us schedule GPU resources dynamically.
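These tasks run independently, so placing them reduces to matching each task to a node with enough free GPU memory. No cross-node network topology has to be considered. The sketch below uses a simple greedy worst-fit heuristic: it takes the largest task first and places it on the node with the most free VRAM. It is an assumed illustration. The names `GpuNode`, `Task`, and `schedule` and the VRAM-only resource model are hypothetical, not Decision AI’s actual scheduler.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class GpuNode:
    node_id: str
    free_vram_gb: float


@dataclass
class Task:
    task_id: str
    vram_gb: float  # GPU memory the task needs on a single node


def schedule(tasks: List[Task], nodes: List[GpuNode]) -> Tuple[Dict[str, str], List[str]]:
    """Greedy worst-fit placement of independent tasks onto GPU nodes.

    Mutates each node's free_vram_gb and returns (assignments, unscheduled task ids).
    """
    assignments: Dict[str, str] = {}
    unscheduled: List[str] = []
    # Place the largest tasks first so small tasks do not fragment the big nodes.
    for task in sorted(tasks, key=lambda t: t.vram_gb, reverse=True):
        candidates = [n for n in nodes if n.free_vram_gb >= task.vram_gb]
        if not candidates:
            unscheduled.append(task.task_id)  # keep it for a later scheduling round
            continue
        best = max(candidates, key=lambda n: n.free_vram_gb)  # node with most free VRAM
        best.free_vram_gb -= task.vram_gb
        assignments[task.task_id] = best.node_id
    return assignments, unscheduled


if __name__ == "__main__":
    nodes = [GpuNode("a", 24), GpuNode("b", 80)]
    tasks = [Task("llm", 40), Task("sd", 10), Task("zk", 20), Task("ft", 70)]
    print(schedule(tasks, nodes))  # ({'ft': 'b', 'zk': 'a', 'sd': 'b'}, ['llm'])
```

In the example, `llm` fits on no node after `ft` is placed. It is returned rather than dropped, so a caller could queue it until capacity frees up.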

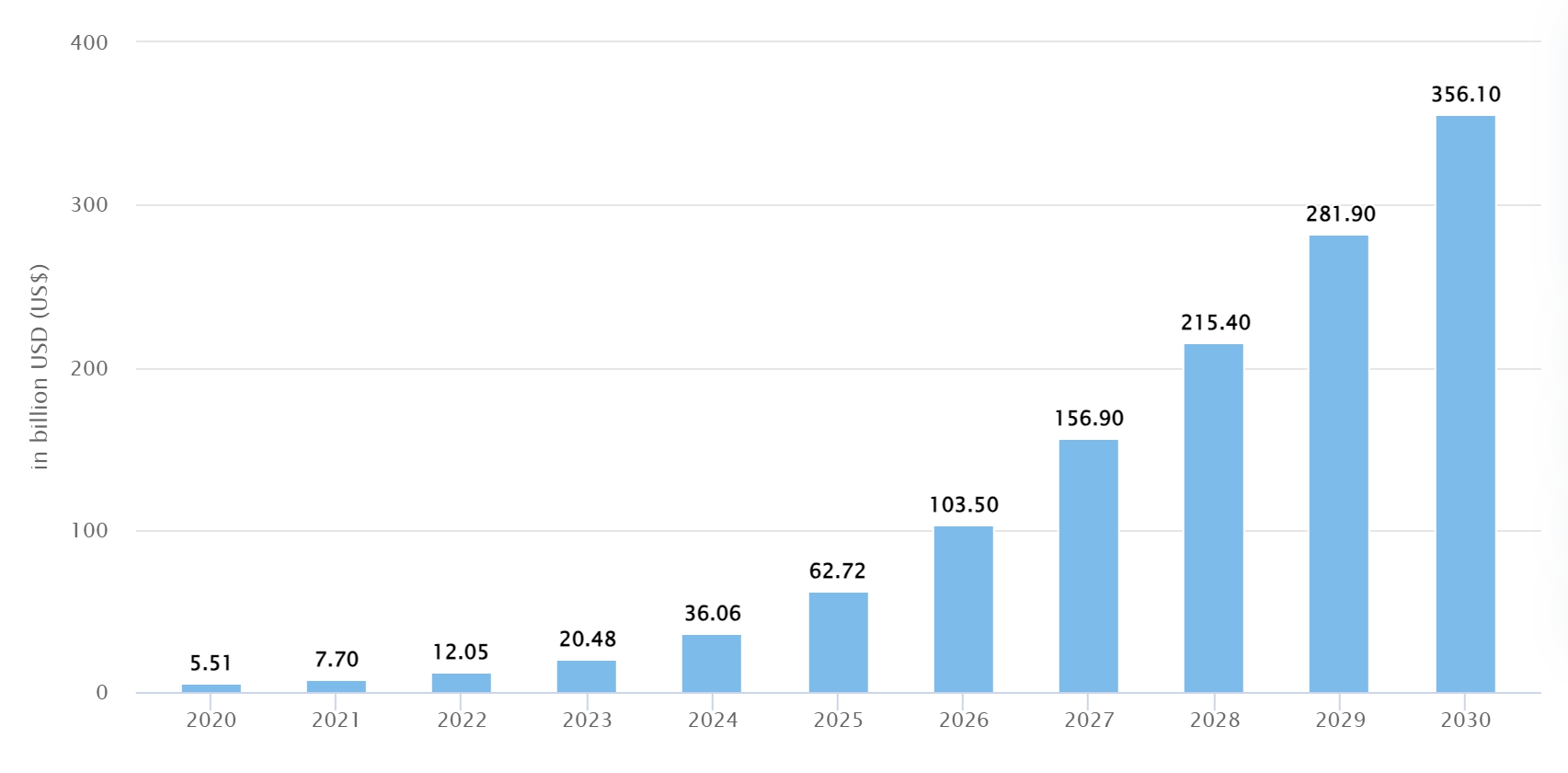

Global generative AI market growth. Source: https://www.statista.com/outlook/tmo/artificial-intelligence/generative-ai/worldwide

To demonstrate the power of the Decision AI serverless GPU DePIN, the Decision AI team has built multiple free-to-use AI apps and services, including:
